Machine Learning a Manifold

My first paper on machine learning has been accepted in Physical Review D! Let me write a quick explainer on this work.

Neural networks have revolutionised research in particle physics, where they are used for a host of applications. We were curious: could machine learning techniques be used to identify the presence of a symmetry in a dataset?

First, what do I mean by a symmetry? Everyone will be familiar with symmetries in nature: even our faces are (nearly) symmetric. To a particle physicist, this same concept can be thought of more abstractly: something is symmetric if it is unchanged under a symmetry operation:

\[f ({\rm new}) = f ({\rm old})\]

For example, if I flipped the left and right sides of your face (as Zoom might do!), you’d look nearly the same. With this abstraction, there are more complicated symmetry transformations you might consider. I wrote a blog post about it here.

We considered a symmetry called SU(3): the special unitary group of rank 3-1=2. Why is this one interesting? Well, it’s a low-dimensional symmetry which still has a rich structure, making it tricky to identify and hard to distinguish from another symmetry called SO(8).

Now, we were particularly interested in Lie algebras. For our purposes, what that means is that we are using very small symmetry transformations:

\[ \rm old \, field \rightarrow new \, field = old \, field + \epsilon \times transformation \]

where \( \epsilon \) is small — so small that we do not care about things like \( \epsilon^2 \).

Then, if a function is symmetric, \(f ({\rm new \, field}) = f ({\rm old\, field})\), at least to order \( \epsilon \):

\[ f({\rm old \, field}) \rightarrow f ( { \rm new \, field}) = f({ \rm old \, field}) + \mathcal{O}(\epsilon^2), \]

i.e. the order \( \epsilon \) term is gone (but we could still have the orders we ignored, \( \epsilon^2 \) and above). On the other hand, if our function were not symmetric, we would still have a term of \( \mathcal{O}(\epsilon) \)!
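
Spelled out as a Taylor expansion (writing \( \phi \) for the old field and \( \delta\phi \) for the transformation, notation introduced just for this aside), the statement reads:

\[ f(\phi + \epsilon \, \delta\phi) = f(\phi) + \epsilon \, \delta\phi \cdot \frac{\partial f}{\partial \phi} + \mathcal{O}(\epsilon^2) \]

So the function is symmetric under the transformation exactly when the linear term vanishes, \( \delta\phi \cdot \partial f / \partial \phi = 0 \), and any leftover change in \( f \) starts at order \( \epsilon^2 \).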

But now we’ve got to figure out how to transform our dataset. And that’s where the neural network comes in: it can be used as a really good interpolator. So it finds \(f ({\rm new\, field}) \) for us, and really quickly too.

Then all we’ve got to do is see if \(f ({\rm new\, field}) - f ({\rm old\, field}) = \mathcal{O}(\epsilon^2) \), and we’ve identified the presence of a symmetry. But we can use this in reverse too: if \(f ({\rm new\, field}) - f ({\rm old\, field})\) leads to \(\mathcal{O} (\epsilon) \), the symmetry must be absent.
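
To make the test concrete, here is a minimal Python sketch. It is not the code from the paper: it skips the neural network entirely and evaluates a known function directly (closer to an idealised case), and the toy function \( {\rm Tr}\, \Phi^3 \), the field values, the chosen generators and all function names are assumptions made just for this illustration. The field has eight real components, assembled into a 3×3 matrix with the Gell-Mann matrices; \( {\rm Tr}\, \Phi^3 \) is unchanged by SU(3) but not by every SO(8) rotation of the eight components.

```python
import numpy as np

def gell_mann():
    """Return the eight Gell-Mann matrices as an array of shape (8, 3, 3)."""
    lam = np.zeros((8, 3, 3), dtype=complex)
    lam[0, 0, 1] = lam[0, 1, 0] = 1
    lam[1, 0, 1], lam[1, 1, 0] = -1j, 1j
    lam[2, 0, 0], lam[2, 1, 1] = 1, -1
    lam[3, 0, 2] = lam[3, 2, 0] = 1
    lam[4, 0, 2], lam[4, 2, 0] = -1j, 1j
    lam[5, 1, 2] = lam[5, 2, 1] = 1
    lam[6, 1, 2], lam[6, 2, 1] = -1j, 1j
    lam[7] = np.diag([1, 1, -2]) / np.sqrt(3)
    return lam

LAM = gell_mann()

def to_matrix(phi):
    """Assemble the 3x3 matrix Phi = sum_a phi_a * lambda_a."""
    return np.einsum("a,aij->ij", phi, LAM)

def f(phi):
    """Toy function (an assumption for this sketch): Tr(Phi^3)."""
    m = to_matrix(phi)
    return np.trace(m @ m @ m).real

def su3_shift(phi, theta):
    """Order-epsilon change of phi under the SU(3) generator theta_a * lambda_a / 2."""
    t = to_matrix(theta) / 2
    m = to_matrix(phi)
    d = 1j * (t @ m - m @ t)                      # i [T, Phi], Hermitian and traceless
    return np.einsum("aij,ji->a", LAM, d).real / 2  # project back onto components

def so8_shift(phi, generator):
    """Order-epsilon change of phi under an antisymmetric 8x8 SO(8) generator."""
    return generator @ phi

def scaling_exponent(phi, shift, eps_values):
    """Slope of log|f(new) - f(old)| against log(eps)."""
    diffs = np.array([f(phi + eps * shift) - f(phi) for eps in eps_values])
    slope, _ = np.polyfit(np.log(eps_values), np.log(np.abs(diffs)), 1)
    return slope

# Hypothetical field configuration and generators, chosen for illustration only.
phi = np.zeros(8)
phi[2], phi[7] = 1.0, 0.5
theta = np.zeros(8)
theta[0] = 1.0                        # SU(3) generator lambda_1 / 2
generator = np.zeros((8, 8))
generator[7, 2], generator[2, 7] = 1.0, -1.0  # SO(8) rotation mixing phi_3 and phi_8

eps_values = np.logspace(-4, -2, 5)
slope_su3 = scaling_exponent(phi, su3_shift(phi, theta), eps_values)
slope_so8 = scaling_exponent(phi, so8_shift(phi, generator), eps_values)
print(f"SU(3): |f(new) - f(old)| ~ eps^{slope_su3:.2f}")
print(f"SO(8): |f(new) - f(old)| ~ eps^{slope_so8:.2f}")
```

For this toy setup the SU(3) exponent comes out close to 2 (no linear term) and the SO(8) exponent close to 1. In the actual analysis the role of `f` is played by the trained network, which has to interpolate well enough for that difference to survive.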

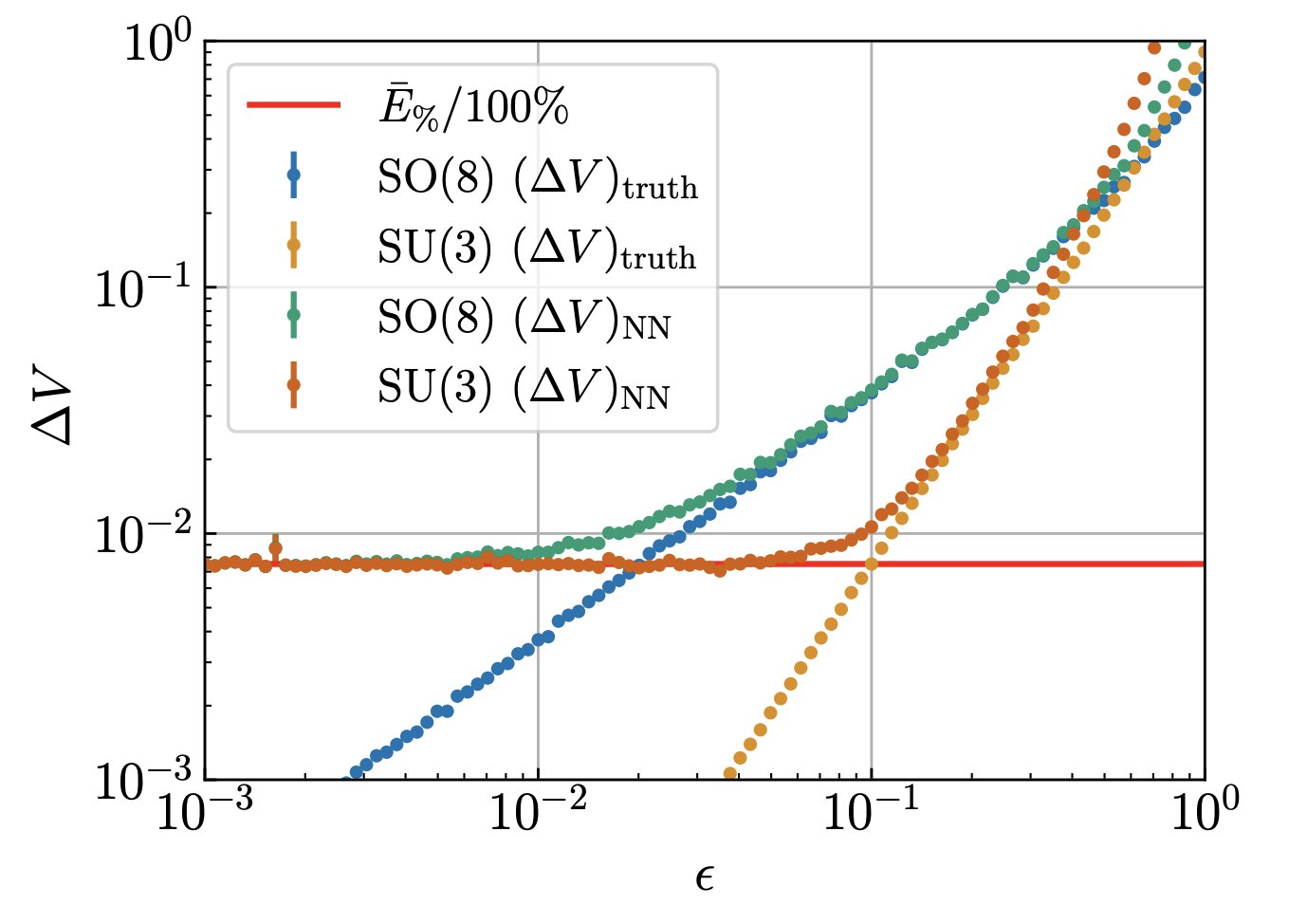

Here’s an example plot showing linear \( \epsilon \) scaling for the absent symmetry SO(8), and NO linear \( \epsilon \) scaling for the symmetry that’s present, SU(3). We show an idealised case (“truth”) as well as the output from our trained neural network (“NN”).

There’ve been a few other interesting suggestions for the problem of identifying a symmetry, but what’s nice about our proposal is that it can be used to show the absence of one too.

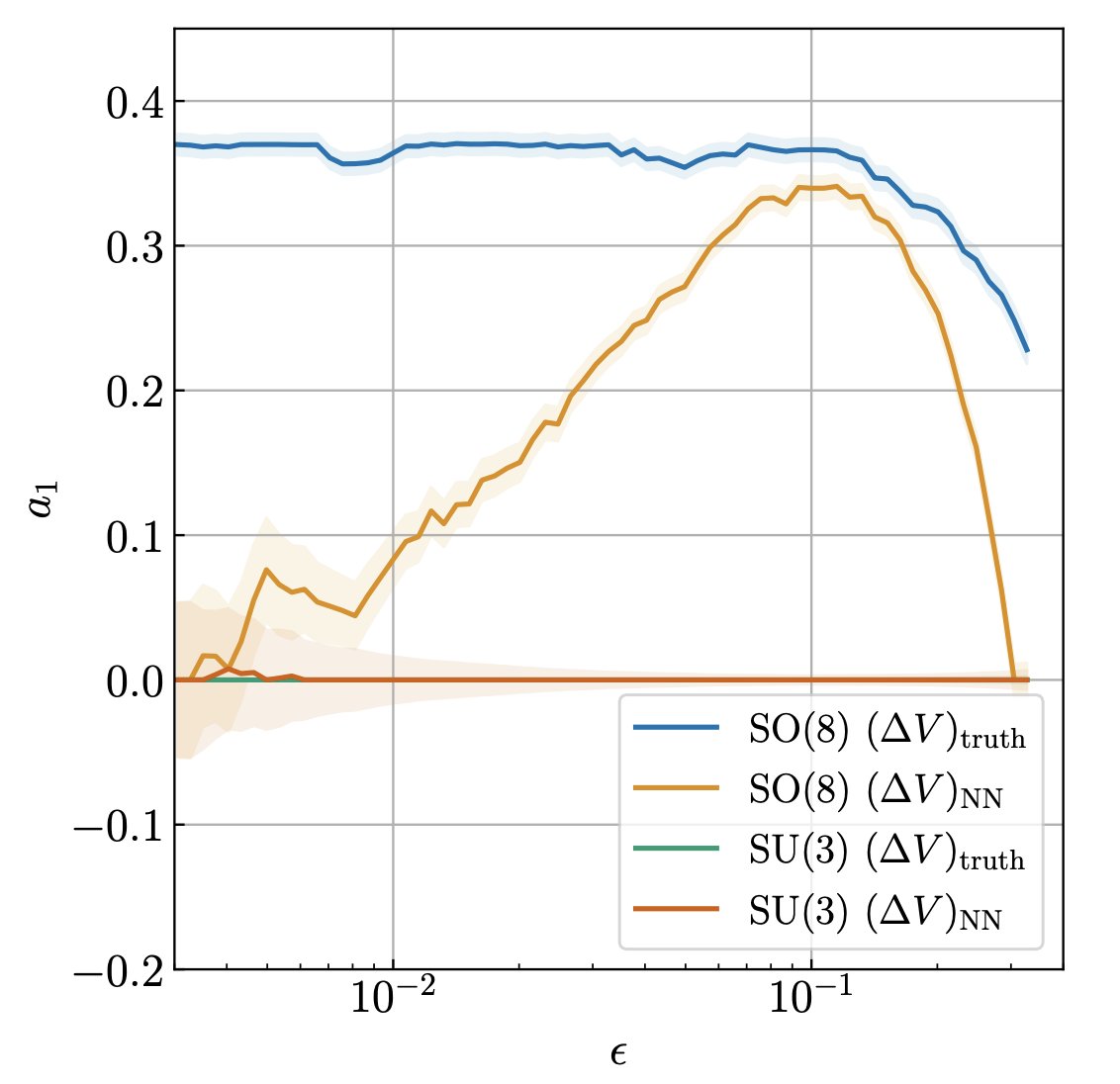

In this plot: linear scaling means \( a_1 \) is nonzero — that is clearly true for the absent SO(8).
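
One way to read off such a coefficient is a polynomial fit. The sketch below assumes that \( a_1 \) denotes the coefficient of the linear term when the change in \( f \) is fitted as \( a_0 + a_1 \epsilon + a_2 \epsilon^2 \), which is my reading of the plot rather than something stated here. To keep it short it swaps SU(3) and SO(8) for a much simpler toy: a first-order rotation in two dimensions, applied to one function that is rotation symmetric and one that is not. All names and numbers are hypothetical.

```python
import numpy as np

def rotate_first_order(x, y, eps):
    """Infinitesimal 2D rotation, keeping only the term linear in eps."""
    return x - eps * y, y + eps * x

def symmetric(x, y):
    """Rotation-symmetric toy function."""
    return x**2 + y**2

def not_symmetric(x, y):
    """Toy function without rotation symmetry."""
    return x**2 + 2 * y**2

def linear_coefficient(func, x, y, eps_values):
    """Fit f(new) - f(old) = a0 + a1*eps + a2*eps^2 and return a1."""
    new_x, new_y = rotate_first_order(x, y, eps_values)
    change = func(new_x, new_y) - func(x, y)
    a2, a1, a0 = np.polyfit(eps_values, change, 2)  # highest power first
    return a1

eps_values = np.linspace(0.0, 0.1, 11)
a1_symmetric = linear_coefficient(symmetric, 1.0, 2.0, eps_values)
a1_broken = linear_coefficient(not_symmetric, 1.0, 2.0, eps_values)
print(f"symmetric function:     a1 = {a1_symmetric:.3e}")
print(f"non-symmetric function: a1 = {a1_broken:.3e}")
```

For the symmetric function the fitted linear coefficient is zero up to rounding, while for the non-symmetric one it is \( 2xy = 4 \) at the chosen point, so a clearly nonzero \( a_1 \) signals that the symmetry is absent.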

It’s been fun to do some out-of-the-box thinking about neural networks and particle physics! We’ve got some related ideas that we’ll be working on in the next few months. Thanks to my brilliant collaborators and congratulations to Sean for his first peer-reviewed paper!